Intro

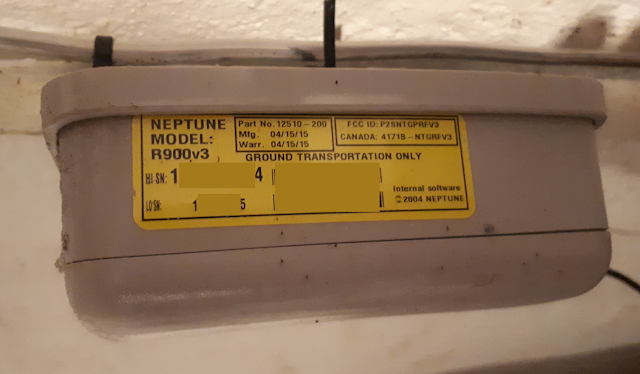

In my previous post about reading my smart water meter in Home Assistant, I left you on a cliff hanger. You don't really need to go back and read that post if you haven't already. Just know that I'm reading my smart water meter using a USB SDR dongle and a tool called rtlamr, (and a wrapper for that tool called rtlamr2mqtt). While I seemed to be able to read the meter, and the ID matched the one printed on the label, there was a drift between the two readings.

Also know that you really don't need to read this post. Of everything I've written for this blog, this may be the most absurd self indulgent post yet. I can guarantee you, that no one will find the contents of this post useful.

Figuring out the offset

My initial readings from the SDR were 157815, while the physical meter read 268770. I had a suspicion that the SDR would have to be multiplied by 10, because the physical meter had an odometer style dial for all the digits down to the 10s digit. The 1s digit was a permanent 0, and then there was a clock style dial, which I assumed was the 1s digit.

I just sort of assumed that the SDR version of this number would also have a resolution of the 10s digit, but I hoped it was better. It was also possible it was much worse. The water company bills me by the 1000s of gallon. If I understood the meter, that would be the white dials. It was entirely possible the SDR was only broadcasting that number. I also wasn't sure what kind of time lag there would be between usage and the SDR reading changing. I was getting readings pretty constantly, about once a minute, maybe more, but those readings didn't seem to change when I was actually using water.

After the first night I could see the SDR meter updating about once an hour. Not ideal, if I was going to use this to help detect leaks, but still better than nothing.

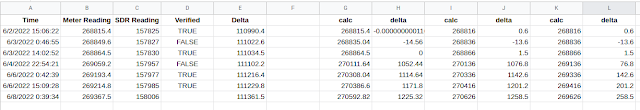

I would wait until a time when we hadn't used much water, and then record the physical meter. If the SDR didn't update for at least an hour before and after the time I read the physical meter, then I assumed that SDR reading corresponded to the physical reading I had. My plan was to do that about once a day for a while and then try to find the offset. I figured with just two pairs, I should be able to find the equation of the line through those points and that ought to be enough to convert between the two. That is, as long as the SDR was increasing in a linear way.

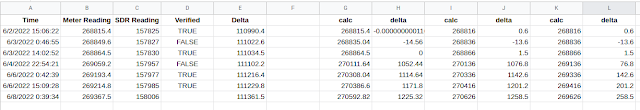

After a day I had these two readings. With both I verified that the SDR reading hadn't changed for a while around the time they were taken, both around 3pm.

6/2: 268815.4 157825

6/3: 268864.5 157830

Solving that line gave me this formula to convert the SDR reading to the physical reading: physical = 9.82 * SDR - 1281026.1. Looking at the multiplier of 9.82 I realized that confirmed my suspicion that the SDR reading was for 10 gallons, and that the factor should just be 10, the only reason it wasn't was the error in the SDR value, since that was only accurate to 10 gallons. I simply used 10x and found the offset and that gave me the slightly updated formula of: physical = 10 * SDR - 1309434.

And with that, I was pretty much done...

Discrepancies

6/3 was a Friday, so I didn't get a chance to get a good reading over the weekend, but come Monday I took another reading to confirm my formula worked. On the afternoon of 6/6 I read the physical meter at 269214.8, and the SDR reading was 157985. Plugging the SDR value into my formula gave: 10 * 157985 - 1309434 = 270416. Which is 1201.2 gallons too high.

Now this was damn peculiar. I wouldn't have been surprised to see some error, but 1200 gallons was way too much. The fact that it was close to 1000 made me suspicious that perhaps I had read something wrong. Luckily I had taken pictures of each physical meter reading. I double and triple checked everything, but got the same results.

I went back and looked at the graph of SDR readings. There was a clear jump at about 11:30am that day. The previous change was at 3am and the SDR value at that time was 157849, then at 11:30 that changed to 157952. Note that just looking at the last two digits the value went from 49 to 52. Those are 10s of gallons so 30 gallons used. That seemed plausible, albeit still a bit high. I began to wonder if the first 4 digits of the SDR number were actually something else, basically two independent numbers just concatenated together. I tried subtracting 1000 from the readings after the jump, and that helped, but they were still off by a lot.

After a week of this I began to lose hope that I'd be able to correct this issue. I tried fitting any curve to these numbers, even though it really didn't make any sense why the meter would go up in a non-linear fashion. I started to rationalize that at least the SDR did seem to increase when we used water, and stay the same when we didn't. At the very least, I could do something with that.

Stumbling Block

You may remember from my first post, that my first stumbling block was reading the default scm meter type, instead of the r900 type I actually had. It turns out this was also my second stumbling block.

I began to be more sure that A. I was not making any mistakes with these readings, and B. this was a common enough water meter that someone else must have also had this issue. After a lot of searching, and just reading through every issue on the github project that mentioned the R900 meter, I finally found this issue.

That was it. Turns out there was another r900 type, called r900bcd (binary coded decimal). I had seen this type, but figured if I was able to read my meter using the r900 type that meant that was what I had. I never thought that the r900bcd type would also be able to read my meter, but produce different outputs.

Sure enough I changed the type to r900bcd, relaunched the program, and the readings matched the physical meter exactly (up to the 10s digit). This was both a huge relief and super annoying that I wasted so much time on what was essentially the same problem I already had.

Either way, I was happy to have accurate water usage data, to within 10 gallons and approximately an hour. I could definitely use that to detect leaks while we were away or asleep. But now I had a new problem. I had already collected a week's worth of priceless data. What was I to do with these data points, which through no fault of their own, had been encoded incorrectly?

Well, if you'd like a snack now is the time to get one, because this is the part where the blog post gets good. If you're somewhat annoyed at having read a 1000 word essay, which was essentially a preamble to the actual purpose of this post, please remember that you were warned earlier.

Binary Coded Decimal

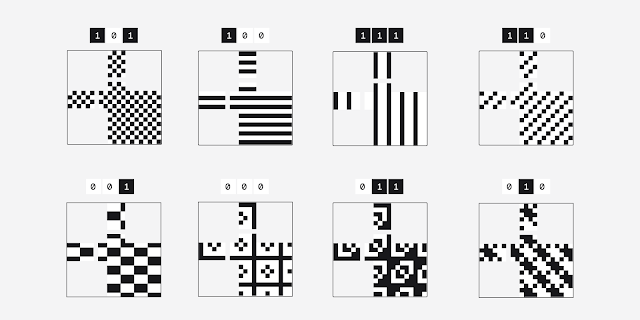

"What is Binary Coded Decimal (BCD)" you almost certainly do not ask yourself? Well it's a system where instead of just converting a full number into binary, you instead take each individual decimal digit and convert it into binary. 4 binary digits let you express all the numbers from 0 to 9, so you can represent each decimal digit with 4 binary digits. Why would we do that? It does have some advantages when expressing numbers which could grow quite large, and we don't want to lose any precision. Dates and times are a good example. We could (and often do) convert a time into just the number of seconds since X, and then store that as a big binary number, but using BCD lets us encode each digit in a date exactly.

Converting decimal numbers into BCD

Now I have these values which seem all over the place, but are actually just numbers which were converted from binary to decimal, when they should have been converted from BCD into decimal. No information has been lost, I simply need to figure out how to correct the encodings of these numbers. Though, it took me a while to even wrap my head around what set of operations I wanted to perform.

After thinking it through for a while, and doing some trial and error with online calculators, I figured it out. I had to convert my decimal values back into (normal) binary. I had to then break that binary number up into groups of 4 digits each. And then, convert each of those groups of 4 binary digits back into a decimal number. Finally, I could just concat those decimal digits into my correct value.

From about a week I didn't have that much data to correct. I could have very easily written a little script to iterate over each value and convert it for me. I didn't need to do this in SQL, and in fact I wasn't even sure I could do this in SQL. But I was pretty sure SQL could convert decimal to binary and back again, and that (plus a bunch of string manipulation) was really all I needed.

The Query

So without further ado, here is the monstrosity of a SQL query I wrote to convert values which had been incorrectly converted from BCD to decimal as if they were simple binary numbers.

I'll attempt to walk you through it, but if you don't know SQL you might as well just skip ahead. I start with a subquery, as I like to do. This subquery is just trying to convert the numbers from decimal back to binary. This is the part doing work:

select right((state::int / 10)::bit(32)::varchar, 20) as binary_num

First it has to divide by 10, then convert to binary, then cast as a string and just take the last 20 digits (4 bits * 5 decimal digits = 20 bits in BCD). Now that I have that BCD string, all I have to do is split it up into groups of 4 bits. Each

SUBSTRING(binary_num, 1, 4)::bit(4)::int::varchar

Is grabbing one group of 4 bits, converting it from a string to a binary number, then to a decimal digit, and then back to a string. And then I just concat those together, convert that back to an int so that I can multiply that by 10, and that is my corrected value.

With that I had a bunch of lines of the ids that had to change and the values they had to change to. Now, despite having written that query, I can never remember how to write an insert or update that uses a subquery off the top of my head. Instead I just copied the results of the select into Sublime, and used my favorite text editor feature of all time, multi-line cursor. I just wrote an update statement for each line all at once and ran those few hundred statements together in a transaction.

Result

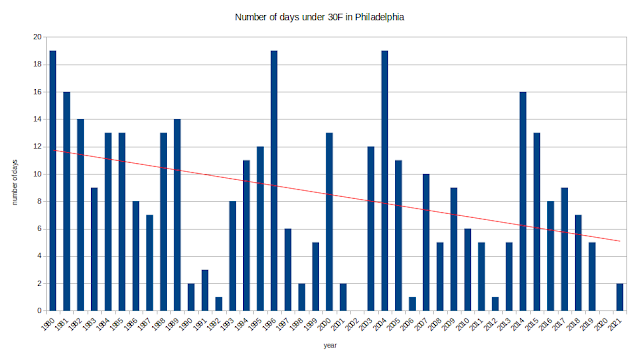

And with that, all was right. I had my priceless data, and could admire my graphs to my heart's content. I'll leave you with this graph of the original vs the corrected data for your viewing pleasure.